Explainable AI in Special Education: Making Technology Understandable for Every Child

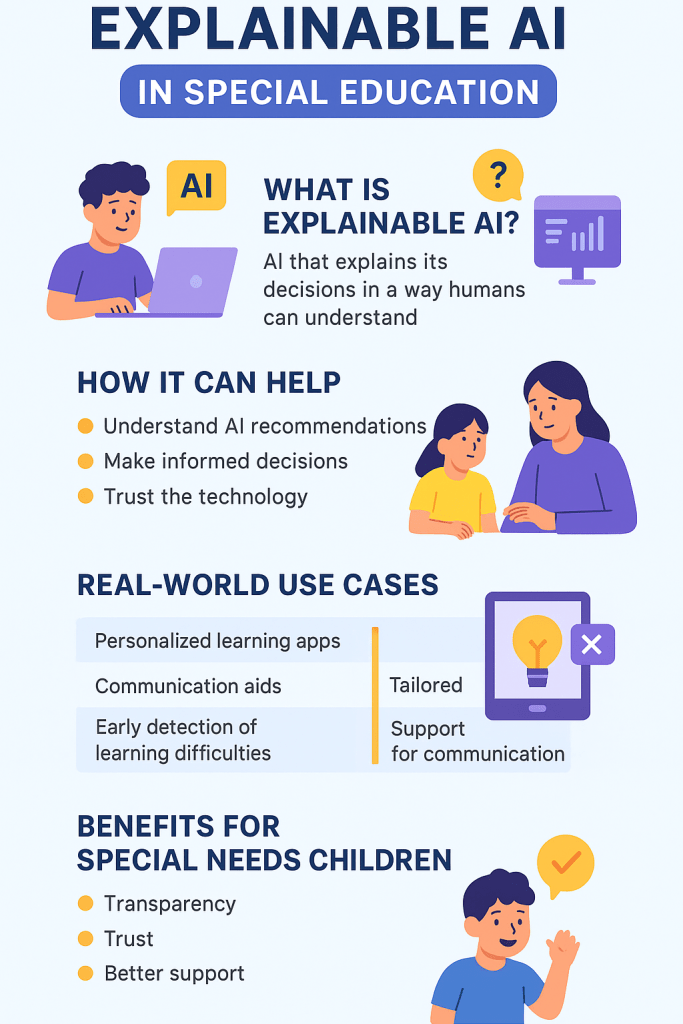

Artificial intelligence is becoming a regular part of classrooms and therapy sessions, especially for children with special needs. But one of the biggest concerns parents and teachers have is not knowing why the AI suggests certain actions. This is where explainable AI comes in. Instead of acting as a “black box” that gives answers without context, explainable AI shows the reasoning behind its decisions in a way people can understand. For children with special needs, this isn’t just about convenience; it’s about building trust and transparency and creating more effective learning experiences.

- What Is Explainable AI? 🤔

- Why Explainable AI Matters in Special Education 🌟

- Real-World Use Cases of Explainable AI in Special Education 🏫

  - 1. Personalized Learning Apps

  - 2. Communication Aids

  - 3. Early Detection of Learning Difficulties

  - 4. Emotional Support Tools

- Benefits of Explainable AI for Special Needs Children 💡

- Table: Standard AI vs. Explainable AI in Special Education

- Example Scenario: Reading Practice 📖

- The Research Backing Explainable AI 🧠

- Challenges of Explainable AI 🚧

- How Teachers and Parents Can Use Explainable AI 👩‍🏫👨‍👩‍👧

- Looking Ahead 🌍

- Final Thoughts 🌟

- FAQs

What Is Explainable AI? 🤔

Explainable AI (often called XAI) refers to artificial intelligence systems that not only provide outputs but also explain the reasoning or data behind them. For example:

- A standard AI app might recommend a reading activity without saying why.

- An explainable AI app would add: “This activity is recommended because the child performed well with visual word recognition but struggled with phonics last week.”

This added layer of explanation makes it easier for teachers, therapists, and parents to understand what’s happening and how to support the child.
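To make the idea concrete, here is a minimal Python sketch of a rule-based recommender that returns an explanation alongside its suggestion. It assumes the app already tracks a per-skill accuracy score between 0 and 1; the skill names, the `ACTIVITIES` mapping, and the `recommend` function are hypothetical and not taken from any real product.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    activity: str
    reason: str


# Hypothetical mapping from a skill to a practice activity for that skill.
ACTIVITIES = {
    "phonics": "phonics sound-matching game",
    "visual word recognition": "sight-word flashcards",
}


def recommend(scores: dict[str, float]) -> Recommendation:
    """Recommend practice for the weakest skill and explain why.

    scores: hypothetical accuracy per skill over the last week (0.0 to 1.0).
    """
    weakest = min(scores, key=scores.get)
    strongest = max(scores, key=scores.get)
    # The explanation is built from the same numbers that drove the choice.
    reason = (
        f"Recommended because the child scored {scores[strongest]:.0%} on "
        f"{strongest} but only {scores[weakest]:.0%} on {weakest} last week."
    )
    return Recommendation(ACTIVITIES[weakest], reason)
```

Calling `recommend({"phonics": 0.45, "visual word recognition": 0.9})` would suggest the phonics game and explain that phonics (45%) lagged behind visual word recognition (90%). Real apps usually rely on statistical models rather than a single rule, but the principle is similar: the reason comes from the data that produced the decision.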

Why Explainable AI Matters in Special Education 🌟

Children with disabilities often need personalized learning paths. With explainable AI:

- Teachers can see why certain lesson plans are being recommended.

- Therapists can better track progress and adjust interventions.

- Parents can feel reassured that the technology is aligned with their child’s real needs.

Transparency is key. Without explanations, recommendations can feel random or confusing. With them, every stakeholder can collaborate more effectively.

Real-World Use Cases of Explainable AI in Special Education 🏫

1. Personalized Learning Apps

- Apps that adjust reading levels or math difficulty for students with learning disabilities.

- With explainable AI, the app clarifies: “The math problems are easier today because the student showed frustration with multi-step problems yesterday.”

2. Communication Aids

- Speech-generating devices often predict words or sentences for non-verbal children.

- Explainable AI helps caregivers understand why certain word suggestions appear, e.g., “The system suggested ‘water’ because the child usually requests it at this time of day.”
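A communication aid could produce an explanation like the “water” example with something as simple as frequency counting. The sketch below assumes a hypothetical log of past requests stored as `(hour_of_day, word)` pairs, and `suggest_word` is an illustrative name; real devices use richer prediction models, so treat this only as a way of pairing a prediction with its evidence.

```python
from collections import Counter
from typing import Optional


def suggest_word(history: list[tuple[int, str]], hour: int) -> Optional[tuple[str, str]]:
    """Suggest the word the child most often requests at this hour, with a reason.

    history: hypothetical log of past requests as (hour_of_day, word) pairs.
    """
    if not history:
        return None  # nothing to learn from yet
    same_hour = Counter(word for h, word in history if h == hour)
    if same_hour:
        word, count = same_hour.most_common(1)[0]
        total = sum(same_hour.values())
        reason = (f"Suggested '{word}' because the child requested it "
                  f"{count} of {total} times around {hour}:00.")
    else:
        # No requests logged for this hour: fall back to the overall favorite.
        word, count = Counter(w for _, w in history).most_common(1)[0]
        reason = (f"Suggested '{word}' because it is the child's most frequent "
                  f"request overall ({count} times).")
    return word, reason
```

With a log in which “water” was requested twice and “snack” once at 10:00, `suggest_word(log, 10)` returns `"water"` with the reason that it was requested 2 of 3 times around 10:00.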

3. Early Detection of Learning Difficulties

- AI can flag possible signs of dyslexia or ADHD by analyzing patterns in a child’s work, so that a specialist can take a closer look.

- Explainable AI makes it clear: “We flagged dyslexia risk because the student consistently reversed letters in 70% of recent writing samples.”
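The flag-with-evidence pattern in that example can be sketched as a simple threshold check. The 50% cut-off, the input format, and the `screen_reversals` function are hypothetical and are not a clinical criterion; letter reversals alone do not establish dyslexia, so a flag like this should only prompt review by a qualified specialist.

```python
def screen_reversals(samples: list[bool], threshold: float = 0.5) -> tuple[bool, str]:
    """Decide whether to flag a child's writing for specialist review.

    samples: one entry per recent writing sample, True if it contained a
             letter reversal (hypothetical input format).
    threshold: hypothetical cut-off for illustration, NOT a clinical criterion.
    """
    if not samples:
        return False, "No writing samples yet, so nothing was flagged."
    reversed_count = sum(samples)  # True counts as 1, False as 0
    rate = reversed_count / len(samples)
    if rate >= threshold:
        return True, (f"Flagged for specialist review because letters were reversed in "
                      f"{rate:.0%} of recent writing samples "
                      f"({reversed_count} of {len(samples)}).")
    return False, (f"Not flagged: reversals appeared in {rate:.0%} of samples, "
                   f"below the {threshold:.0%} cut-off.")
```

Returning the counts with the flag lets a teacher check the evidence directly instead of taking the flag on faith.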

4. Emotional Support Tools

- AI-driven apps analyze facial expressions or tone of voice to identify emotions.

- With explainability, the tool might say: “We detected frustration because the child’s voice rose in pitch and they avoided eye contact.”
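One common way to make a model’s output explainable is to use an additive model, where each input signal’s contribution to the score can be read off directly. The sketch below is a toy logistic model; it assumes some upstream detector already turns audio and video into signals between 0 and 1 (`pitch_rise`, `eye_contact_avoided`, `smiling` are hypothetical names), and the weights are invented for illustration rather than learned from data.

```python
import math

# Hypothetical, hand-picked weights for illustration; a real tool would learn
# these from labeled data and validate them carefully.
WEIGHTS = {"pitch_rise": 1.5, "eye_contact_avoided": 1.0, "smiling": -2.0}
BIAS = -1.0


def explain_frustration(signals: dict[str, float]) -> tuple[float, list[str]]:
    """Estimate the probability of frustration and list the signals behind it.

    signals: hypothetical detector outputs in [0, 1]; missing signals count as 0.
    """
    # Each signal's contribution to the logit is simply weight * value.
    contributions = {name: w * signals.get(name, 0.0) for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    # Signals that pushed the score up, strongest first, form the explanation.
    ranked = sorted(contributions.items(), key=lambda item: item[1], reverse=True)
    reasons = [name for name, c in ranked if c > 0]
    return probability, reasons
```

For a linear score like this, each weight multiplied by its signal value is exactly that signal’s share of the score relative to a baseline where all signals are 0, which is why such models are often described as interpretable by design. More complex models need dedicated attribution methods to approximate the same kind of breakdown.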

Benefits of Explainable AI for Special Needs Children 💡

- Transparency: Everyone understands the “why” behind the AI’s decision.

- Trust: Parents and teachers are more confident in the recommendations.

- Customization: Explanations highlight what’s working and what isn’t, making it easier to adjust support.

- Empowerment: Children feel included when they can understand why a system is suggesting something.

Table: Standard AI vs. Explainable AI in Special Education

| Feature | Standard AI | Explainable AI |
|---|---|---|
| Recommendations | Given without context | Come with a clear explanation of the reasoning |
| Parent Confidence | Often lower | Often higher |
| Teacher Adjustment | Limited | Easier because the reasoning is visible |
| Child Engagement | Choices can feel random | Builds understanding |

Example Scenario: Reading Practice 📖

Imagine a 9-year-old with dyslexia using a reading app:

- Standard AI: Assigns a reading passage at grade level.

- Explainable AI: Assigns a slightly lower-level passage and explains: “This passage was chosen because the child struggled with word decoding yesterday but excelled at comprehension questions.”

That explanation allows the teacher to reinforce decoding skills while acknowledging the child’s strengths.
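The reading-practice decision could be expressed as a short rule that records its own reasons as it goes. In this sketch, the 60% cut-off, the one-level step down, and the `choose_passage_level` function are hypothetical choices for illustration, and the accuracy scores are assumed to come from the previous day’s session.

```python
def choose_passage_level(grade_level: int, decoding: float, comprehension: float,
                         cutoff: float = 0.6) -> tuple[int, str]:
    """Pick a passage level and explain the choice.

    decoding, comprehension: accuracy (0 to 1) from the previous session.
    cutoff: hypothetical accuracy below which a skill counts as a struggle.
    """
    if decoding < cutoff:
        level = max(1, grade_level - 1)  # step down one level, never below 1
        reason = (f"Level {level} passage chosen because decoding accuracy "
                  f"was {decoding:.0%} yesterday")
        if comprehension >= cutoff:
            # Mention the strength too, so the teacher sees the full picture.
            reason += f", while comprehension was strong at {comprehension:.0%}"
        return level, reason + "."
    return grade_level, (f"Grade-level passage chosen because decoding accuracy "
                         f"was {decoding:.0%} yesterday.")
```

For example, `choose_passage_level(4, decoding=0.4, comprehension=0.9)` returns level 3 with the reason "Level 3 passage chosen because decoding accuracy was 40% yesterday, while comprehension was strong at 90%."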

The Research Backing Explainable AI 🧠

Studies show that AI without transparency often creates mistrust among educators and families. A Harvard study on explainable AI highlights that trust improves significantly when systems show reasoning. Similarly, UNESCO has emphasized the importance of responsible and transparent AI in education (UNESCO report on AI in education).

Challenges of Explainable AI 🚧

- Complexity: Some AI models, such as deep neural networks, are so complex that turning their decisions into simple explanations is hard.

- Time: Teachers may not always have time to review detailed explanations.

- Accessibility: Explanations need to be simple enough for non-technical parents to understand.

These challenges highlight the need for user-friendly design in explainable AI tools for classrooms.

How Teachers and Parents Can Use Explainable AI 👩‍🏫👨‍👩‍👧

- Ask for explanations: When choosing apps, look for those that provide reasoning behind recommendations.

- Combine with human judgment: AI should support, not replace, teacher expertise.

- Involve children: Show students why the app makes choices. This helps them feel more in control.

Looking Ahead 🌍

As explainable AI becomes more advanced, we can expect:

- Clearer, simpler feedback in apps for children.

- Tools that support collaboration between parents, teachers, and therapists.

- Better identification of strengths and struggles for individualized education plans (IEPs).

For children with special needs, this means education that is not only smarter but also more transparent and inclusive.

Final Thoughts 🌟

Explainable AI is not just about smarter machines — it’s about making technology more human-friendly. For children with special needs, this means learning tools that don’t just deliver results but also explain them. That transparency builds trust, empowers students, and creates an inclusive educational environment where every child feels understood and supported.

FAQs

What is explainable AI in education?

Explainable AI refers to artificial intelligence systems that clarify the reasoning behind their decisions, helping teachers, parents, and students understand why a certain recommendation is made.

How does explainable AI support children with special needs?

It helps by providing transparency in learning recommendations, making it easier for teachers and parents to understand what strategies are working and what needs adjusting.

Can explainable AI be used in communication devices?

Yes, many speech-generating devices can benefit from explainable AI by showing why certain word or phrase suggestions are given, improving trust and communication.

What are the main benefits of explainable AI in special education?

The main benefits include transparency, increased trust, better customization of learning plans, and stronger collaboration between families and educators.

Are there any risks in using explainable AI?

The risks include potential data privacy concerns, complexity of explanations, and over-reliance on technology. Balancing AI use with human expertise is essential.