The Ethics of Artificial Intelligence in Special Needs: Why Human Connection Still Matters Most

Artificial intelligence is now part of nearly every conversation about therapy, education, and accessibility. Schools, parents, and therapists are looking at chatbots, generative AI, and deep learning systems, including platforms like OpenAI’s ChatGPT, Anthropic’s Claude, and Midjourney, to fill gaps in special education and therapy.

On one side, these tools promise speed, personalization, and availability. On the other, there’s a concern that too much reliance on machines can push aside the human touch that children and adults with special needs depend on most.

This article takes a closer look at how artificial intelligence fits into special needs care, what ethical issues come up, and why human connection can’t be replaced, no matter how advanced AI robots or chatbots become.

- Introduction to Artificial Intelligence in Special Needs

- The Ethical Questions Around Artificial Intelligence

- Where AI Helps the Most

- The Value of Human Connection

- Balancing AI and Human Roles

- Looking Ahead: Artificial General Intelligence (AGI) and Special Needs

- Final Thoughts

- FAQs

- Q1. Can AI replace therapists in special needs education?

- Q2. What are the risks of using AI chatbots with children?

- Q3. How is AI currently helping children with special needs?

- Q4. What’s the difference between AI and Artificial General Intelligence (AGI)?

- Q5. How can parents ensure AI use is ethical in therapy?

Introduction to Artificial Intelligence in Special Needs

Before jumping into ethics, it helps to understand how artificial intelligence is used in education and therapy today. AI-powered platforms are being applied in several areas:

- Personalized learning tools – AI chatbots that adjust reading or math lessons for children with learning disabilities.

- Speech and communication aids – AI-powered voice assistants and transcription tools like Otter.ai that help children with autism or speech delays communicate.

- Behavior tracking – AI systems that monitor student behavior to provide feedback to teachers and parents.

- Generative AI visuals – Tools like Midjourney, DALL·E, and Imagen help create visual learning materials tailored for students who need more pictorial support.

Real Numbers

- Around 93 million children worldwide live with some form of disability that requires special support in learning and development (UNESCO, 2020).

- The global market for AI in education is expected to grow from $4 billion in 2022 to nearly $30 billion by 2030 (MarketsandMarkets, 2022).

These numbers explain why AI is gaining attention, but they also raise the stakes for using it responsibly and ethically.

The Ethical Questions Around Artificial Intelligence

When AI enters special education and therapy, ethical issues become very real. Let’s break down the main areas:

1. Privacy and Data Use

- AI tools collect sensitive data about children—medical records, emotional responses, even facial expressions.

- With platforms like OpenAI’s ChatGPT, Meta AI, or Google’s AI chatbots, there’s always a question of how data is stored and used.

- Parents want transparency about whether their child’s information is being fed into AI training datasets.
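For schools that send session notes to an outside AI service, one practical safeguard is to pseudonymize identifying details before anything leaves the building. The Python sketch below is illustrative only and rests on assumptions: the secret key, the `STUDENT_` token format, and the `pseudonym` and `redact` helpers are hypothetical, and simple name matching will miss nicknames, addresses, and other identifiers, so it supports rather than replaces a proper data-protection review.

```python
import hashlib
import hmac
import re

# Hypothetical secret held by the school and never shared with the AI vendor.
SECRET_KEY = b"replace-with-a-school-held-secret"


def pseudonym(name: str) -> str:
    """Return a stable token for a name; without SECRET_KEY it cannot be recomputed."""
    digest = hmac.new(SECRET_KEY, name.lower().encode("utf-8"), hashlib.sha256)
    return f"STUDENT_{digest.hexdigest()[:8]}"


def redact(note: str, names: list[str]) -> str:
    """Replace known names and ISO-style dates before a note leaves the school."""
    for name in names:
        # Whole-word, case-insensitive match so "Maya" also catches "maya".
        pattern = r"\b" + re.escape(name) + r"\b"
        note = re.sub(pattern, pseudonym(name), note, flags=re.IGNORECASE)
    # Mask dates such as 2015-04-23 (e.g. birth dates) with a placeholder.
    return re.sub(r"\b\d{4}-\d{2}-\d{2}\b", "[DATE]", note)


# Example with made-up data.
note = "Maya (born 2015-04-23) got frustrated in reading; maya calmed down with picture cards."
safe_note = redact(note, ["Maya"])
print(safe_note)
```

Because the same name always maps to the same token, staff can still follow one child’s progress across notes, while the vendor only ever sees the token.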

2. Over-reliance on AI

- If teachers and therapists lean too heavily on AI bots or online chat platforms, children may lose out on the deeper relationships they need.

3. Bias and Fairness

- Many AI models are trained on broad internet data, which can carry bias, stereotypes, or assumptions.

- In a therapy setting, even small errors can harm a child’s confidence or reinforce exclusion.

4. Accessibility vs. Replacement

- Tools like AI robots and online chatbots make therapy more accessible, especially in regions with few specialists.

- But there’s a fine line between filling gaps and replacing human experts.

Where AI Helps the Most

Despite the concerns, AI has clear benefits when used carefully.

| AI Tool | Use Case in Special Needs | Human Role Still Needed |
|---|---|---|
| ChatGPT (GPT-4) | Personalized learning, answering questions | Teacher verifies content, ensures emotional support |
| Midjourney / DALL·E | Creating customized visual learning aids | Educator explains context |
| AI robots (Sophia, Tesla Optimus) | Assisting with repetitive tasks, practice sessions | Therapist builds trust and emotional connection |
| Otter.ai / Murf AI | Speech-to-text, text-to-speech, language practice | Human ensures meaning and nuance |
| Machine learning and deep learning models | Behavior tracking, data analysis | Psychologists interpret results and adapt therapy |

This shows AI isn’t meant to stand alone. It’s strongest as a support tool.

The Value of Human Connection

Children with special needs often rely on trust, empathy, and human intuition, which no chatbot can truly provide.

- A therapist notices subtle changes in body language that a GPT-based chatbot might miss.

- A teacher adapts in the moment to a child’s mood, frustration, or joy.

- Parents offer comfort and cultural understanding no algorithm can replicate.

Research supports this. A 2021 paper in Frontiers in Psychology highlighted that emotional bonding and social interaction remain the most important predictors of progress for children with autism (Frontiers, 2021).

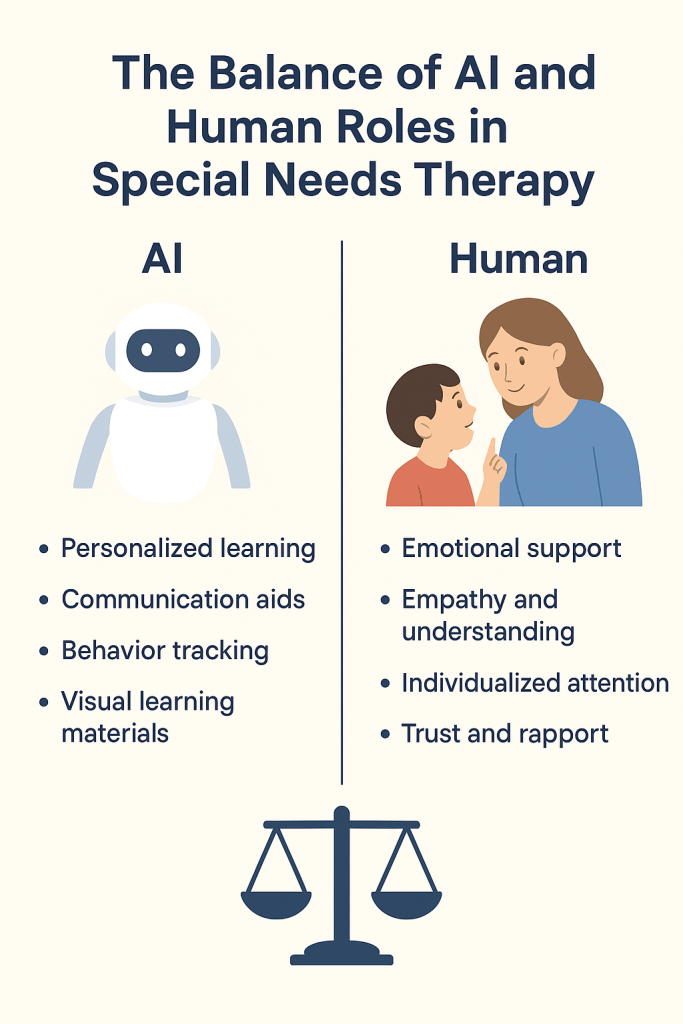

Balancing AI and Human Roles

Here are some principles to keep both ethics and efficiency in check:

- Transparency – Schools and clinics should explain how AI tools and services, such as the OpenAI API, are being used.

- Human-first approach – AI supports, but never replaces, teachers and therapists.

- Bias testing – Regularly audit AI-powered education platforms and chatbot tools for bias.

- Ongoing training – Educators should learn how to blend AI use with human care.
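One simple starting point for bias testing is to compare how often a tool produces a given outcome, such as flagging a behavior, across different groups of students. The Python sketch below assumes a hypothetical log of `(group, was_flagged)` pairs and an illustrative review threshold of 0.8 that we chose for the example, not an established standard.

```python
from collections import defaultdict

# Illustrative threshold (an assumption, not a standard): ratios below this
# trigger a human review of the tool's behavior.
REVIEW_THRESHOLD = 0.8


def flag_rates(records):
    """Return the share of students flagged by the tool, per group.

    records: iterable of (group, was_flagged) pairs from a hypothetical log.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by highest; 1.0 means all groups are flagged equally."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was flagged, so there is no disparity to measure
    return min(rates.values()) / highest


# Made-up example: group_b is flagged 2.5 times as often as group_a.
log = (
    [("group_a", True)] * 2 + [("group_a", False)] * 8
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)
rates = flag_rates(log)
ratio = disparity_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove a tool is unfair, since groups can differ for legitimate reasons, but it tells staff where to look and keeps the final judgment with people.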

Looking Ahead: Artificial General Intelligence (AGI) and Special Needs

Some technologists, such as Demis Hassabis (DeepMind) and Ilya Sutskever (co-founder of OpenAI), are pushing toward artificial general intelligence (AI that can reason and adapt like humans). While this raises hopes for smarter interventions, it also magnifies ethical questions:

- Will AGI understand cultural, emotional, and individual differences?

- Could reliance on AGI reduce funding for human therapists and teachers?

- How should regulations define what AI can and cannot do in therapy?

Until there are answers, the safest path is to keep human-AI collaboration balanced.

Final Thoughts

Artificial intelligence is not going away; it’s already part of classrooms, therapy centers, and even homes. From chatbots to AI robots, the tools are becoming more powerful. Yet special needs education and therapy thrive on something AI can’t reproduce: authentic human connection.

So while AI development should continue, parents, teachers, and therapists must protect that bond. The best results will come from a mix: let AI handle data, repetition, and personalization, but let humans bring empathy, care, and understanding.

FAQs

Q1. Can AI replace therapists in special needs education?

No. AI tools like OpenAI’s ChatGPT or Anthropic’s Claude can support learning and therapy, but they lack empathy, intuition, and cultural understanding. Human connection is still central.

Q2. What are the risks of using AI chatbots with children?

Risks include data privacy concerns, exposure to biased content, and over-reliance on automated responses without human guidance.

Q3. How is AI currently helping children with special needs?

AI is used in personalized learning apps, communication tools, speech recognition, and behavior tracking. Tools like DALL·E or Midjourney also help create visual aids.

Q4. What’s the difference between AI and Artificial General Intelligence (AGI)?

AI usually focuses on narrow tasks, like a chatbot answering math questions. AGI, still under development, would aim to think and reason like a human across different areas.

Q5. How can parents ensure AI use is ethical in therapy?

Parents should ask about consent and how data is stored, check whether the AI platform (such as OpenAI’s ChatGPT or Meta AI) has undergone bias audits, and confirm that human oversight remains central.