How to Use the 5 Applications of AI to Decode Your Child’s Non-Verbal Communication 🧩

Understanding a child’s non-verbal communication can be challenging for parents and caregivers, especially when the child has limited or no speech. Advances in artificial intelligence have produced tools that aim to interpret gestures, facial expressions, and emotional cues. This guide explores 5 applications of AI that can help you interpret your child’s non-verbal communication.

- Understanding the Importance of Non-Verbal Communication in Children 🧠

- The 5 Applications of AI in Decoding Non-Verbal Communication 🤖

- 1. Facial Expression Recognition

- 2. Gesture Recognition

- 3. Emotion Detection in Voice Patterns

- 4. Behavioral Pattern Analysis

- 5. Augmentative and Alternative Communication (AAC) Tools

- Implementing AI Applications at Home 🏡

- Benefits of AI-Driven Communication Support 🌟

- Tips for Effective Use

- Conclusion 🌈

- FAQs

Understanding the Importance of Non-Verbal Communication in Children 🧠

Non-verbal communication includes facial expressions, body movements, gestures, and other cues that convey emotions, needs, or thoughts without speech. For children with autism, speech delays, or other communication challenges, non-verbal cues are often their primary method of interaction.

By leveraging 5 applications of AI, parents and therapists can:

- Identify emotional states through facial and body recognition.

- Monitor engagement and attention levels.

- Enhance interaction by responding appropriately to cues.

Research indicates that AI-driven analysis of non-verbal behavior can improve understanding and interventions for children with communication challenges (source).

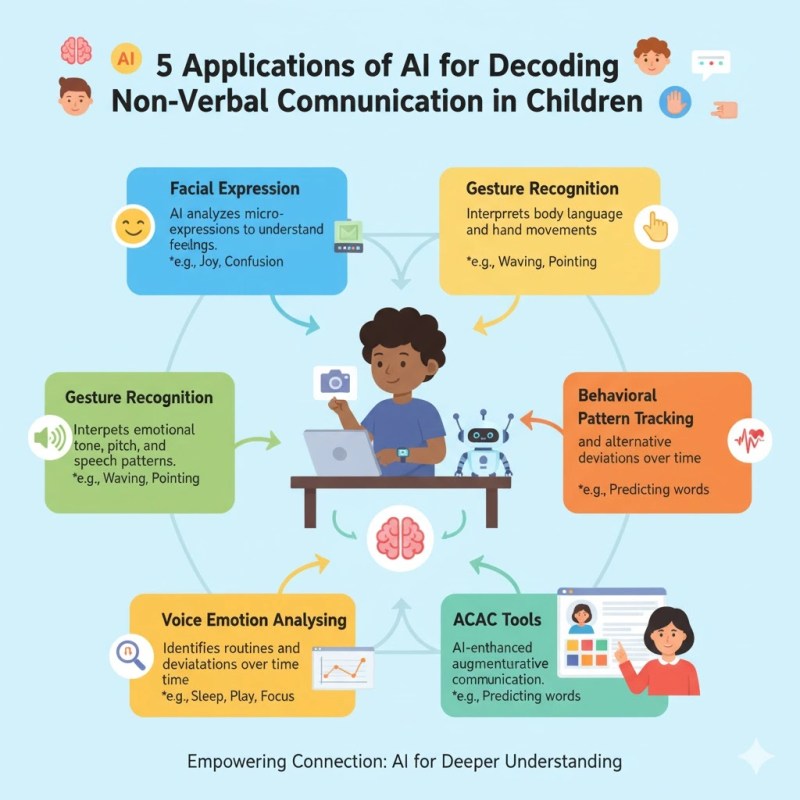

The 5 Applications of AI in Decoding Non-Verbal Communication 🤖

AI can be used in multiple ways to decode non-verbal communication. Here are the five most impactful applications:

1. Facial Expression Recognition

- Uses computer vision to identify emotions such as happiness, sadness, anger, or fear.

- Tracks micro-expressions that might be missed by human observation.

- Example tools: Affectiva, FaceReader.
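
To make the idea concrete, here is a minimal Python sketch of the last step of facial expression recognition: turning per-emotion scores into a single label. It assumes a recognition model has already produced a score between 0 and 1 for each emotion. The function name `top_emotion`, the `min_confidence` threshold, and the sample scores are all hypothetical and do not reflect how Affectiva or FaceReader work internally.

```python
from typing import Dict


def top_emotion(scores: Dict[str, float], min_confidence: float = 0.6) -> str:
    """Return the highest-scoring emotion, or 'uncertain' if no score is strong enough."""
    if not scores:
        return "uncertain"
    # Pick the (emotion, score) pair with the largest score.
    label, score = max(scores.items(), key=lambda item: item[1])
    return label if score >= min_confidence else "uncertain"


# Hypothetical scores for one video frame (illustrative values only).
frame_scores = {"happiness": 0.72, "sadness": 0.10, "anger": 0.05, "fear": 0.13}
print(top_emotion(frame_scores))
```

Returning "uncertain" for weak scores is a deliberate choice in this sketch: it leaves ambiguous moments to the caregiver's judgment instead of forcing a guess.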

2. Gesture Recognition

- AI sensors or cameras detect hand movements, body posture, or specific gestures.

- Converts physical cues into actionable insights, helping caregivers respond to needs.

- Can be integrated with tablet-based apps for interactive feedback.

3. Emotion Detection in Voice Patterns

- Even non-verbal children may produce sounds, intonations, or vocalizations.

- AI analyzes pitch, frequency, and tone to identify emotional states.

- Tools like Beyond Verbal use voice analytics to interpret emotions.
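
As a rough illustration of what "analyzing pitch" can mean, the Python sketch below estimates the pitch of a short sound with a basic autocorrelation search. It is a simplified textbook technique, not the method used by Beyond Verbal or any other product. The function name `estimate_pitch_hz`, the 80 to 600 Hz search range, and the synthetic test tone are assumptions made for this example. Real vocalizations are noisier and need more robust processing.

```python
import math
from typing import List


def estimate_pitch_hz(samples: List[float], sample_rate: int,
                      min_hz: float = 80.0, max_hz: float = 600.0) -> float:
    """Estimate pitch by finding the time shift (lag) at which the signal best matches itself."""
    min_lag = int(sample_rate / max_hz)  # shortest period considered
    max_lag = int(sample_rate / min_hz)  # longest period considered
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Autocorrelation: multiply the signal by a shifted copy of itself and sum.
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


# Synthetic 200 Hz tone standing in for a recorded vocalization (assumed input).
sample_rate = 8000
tone = [math.sin(2 * math.pi * 200.0 * n / sample_rate) for n in range(1600)]
pitch = estimate_pitch_hz(tone, sample_rate)
print(round(pitch))  # expected to be close to 200 for this clean tone
```

Pitch on its own does not identify an emotion. Tools of this kind typically combine several such measurements before suggesting an emotional state.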

4. Behavioral Pattern Analysis

- AI monitors repeated actions or behaviors to identify patterns.

- Helps distinguish between expressions of stress, excitement, or engagement.

- Provides data-driven insights for therapists to adjust interventions.
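
The core of pattern analysis can be sketched in a few lines of Python: count how often each behavior appears in each situation and surface the combinations that repeat. The log format, the `frequent_patterns` function, and the sample entries below are hypothetical. A real tool would likely work from sensor or video data and not a hand-written log.

```python
from collections import Counter
from typing import List, Tuple

Observation = Tuple[str, str]  # (context, behavior)


def frequent_patterns(log: List[Observation],
                      min_count: int = 3) -> List[Tuple[Observation, int]]:
    """Return (context, behavior) pairs seen at least min_count times, most frequent first."""
    counts = Counter(log)
    return [(pair, n) for pair, n in counts.most_common() if n >= min_count]


# Hypothetical observations recorded over a few days.
log = [
    ("noisy room", "covers ears"),
    ("noisy room", "covers ears"),
    ("bedtime", "rocking"),
    ("noisy room", "covers ears"),
    ("playtime", "pointing"),
    ("bedtime", "rocking"),
]
patterns = frequent_patterns(log)
print(patterns)
```

A summary like this only shows that a behavior tends to recur in a given setting. Deciding whether it signals stress, excitement, or engagement still takes a caregiver or therapist.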

5. Augmentative and Alternative Communication (AAC) Tools

- AI enhances communication apps with predictive text, symbol recognition, or gesture-to-speech translation.

- Supports both understanding the child and giving them a voice.
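
Prediction in an AAC app can be pictured as learning which word or symbol usually follows another. The Python sketch below uses simple pair counts (a bigram model) to suggest the next symbol. It is an assumption-laden illustration, not the approach of Proloquo2Go or any specific product, and the names `build_bigram_model` and `suggest_next` and the sample messages are invented for this example.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def build_bigram_model(sequences: List[List[str]]) -> Dict[str, Counter]:
    """Count, for every symbol, which symbols have followed it in past messages."""
    model: Dict[str, Counter] = defaultdict(Counter)
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            model[current][following] += 1
    return model


def suggest_next(model: Dict[str, Counter], current: str, k: int = 2) -> List[str]:
    """Return up to k symbols that most often followed the current symbol."""
    followers = model.get(current, Counter())
    return [symbol for symbol, _ in followers.most_common(k)]


# Hypothetical message history built from symbol selections.
history = [
    ["I", "want", "juice"],
    ["I", "want", "play"],
    ["I", "want", "juice"],
    ["I", "feel", "tired"],
]
model = build_bigram_model(history)
print(suggest_next(model, "want"))
```

Because the suggestions come from the child's own message history, they become more personal as the child uses the app more.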

Implementing AI Applications at Home 🏡

Parents can integrate AI tools into their daily routines to support communication and development:

Step-by-Step Guide

- Select Appropriate AI Tools: Choose apps or devices suitable for your child’s age and abilities.

- Integrate into Daily Activities: Use AI tools during play, learning, or therapy sessions.

- Monitor and Record: Track responses, patterns, and emotions to adjust strategies.

- Adjust Based on Feedback: Use real-time AI feedback to guide responses and enhance communication.

Recommended AI Tools and Platforms

| Tool | Purpose | Key Features |
|---|---|---|
| Affectiva | Facial emotion recognition | Real-time emotion tracking, micro-expression analysis |
| FaceReader | Emotional analysis | Facial coding, expression classification |
| Beyond Verbal | Voice emotion analytics | Pitch, tone, and emotion detection |
| Proloquo2Go | AAC support | Predictive text, symbol-to-speech, gesture input |
| Tobii Dynavox | Eye-tracking communication | Eye gaze interaction, symbol-based communication |

Benefits of AI-Driven Communication Support 🌟

AI applications bring several advantages to decoding non-verbal cues:

- Enhanced Understanding: Can flag subtle cues that human observers often miss.

- Data-Driven Insights: Provides measurable information for therapists and caregivers.

- Improved Interaction: Supports timely and appropriate responses to children’s needs.

Tips for Effective Use

- Consistency: Use AI tools regularly to establish reliable communication patterns.

- Supervised Interaction: Always supervise to ensure accuracy and safety.

- Combination with Human Observation: AI should supplement, not replace, caregiver intuition.

- Sensitivity Settings: Adjust for children who may be overwhelmed by sensors or screen-based tools.

Conclusion 🌈

Leveraging these 5 applications of AI to decode non-verbal communication can make it easier for a child to express themselves and interact with the world. By combining facial expression recognition, gesture recognition, voice analytics, behavioral pattern analysis, and AAC tools, parents and therapists can gain deeper insight into a child’s emotional and social needs and support more effective communication and development.

FAQs

1. What are the 5 applications of AI in non-verbal communication?

They include facial expression recognition, gesture recognition, emotion detection in voice, behavioral pattern analysis, and augmentative and alternative communication tools.

2. Are AI tools safe for children?

Generally, yes. When used under adult supervision and with appropriate settings, AI tools are considered safe and can help enhance communication.

3. Can AI replace a therapist in decoding non-verbal cues?

No, AI complements human observation by providing data-driven insights, but professional guidance remains essential.

4. How do AI tools help parents understand emotions?

AI tools analyze facial expressions, gestures, and voice patterns to detect emotions, providing real-time insights for responsive interactions.

5. Are there apps that combine multiple AI functions for communication support?

Yes, platforms like Proloquo2Go and Tobii Dynavox combine features such as predictive text, symbol-based communication, and eye-gaze interaction to support non-verbal interaction.